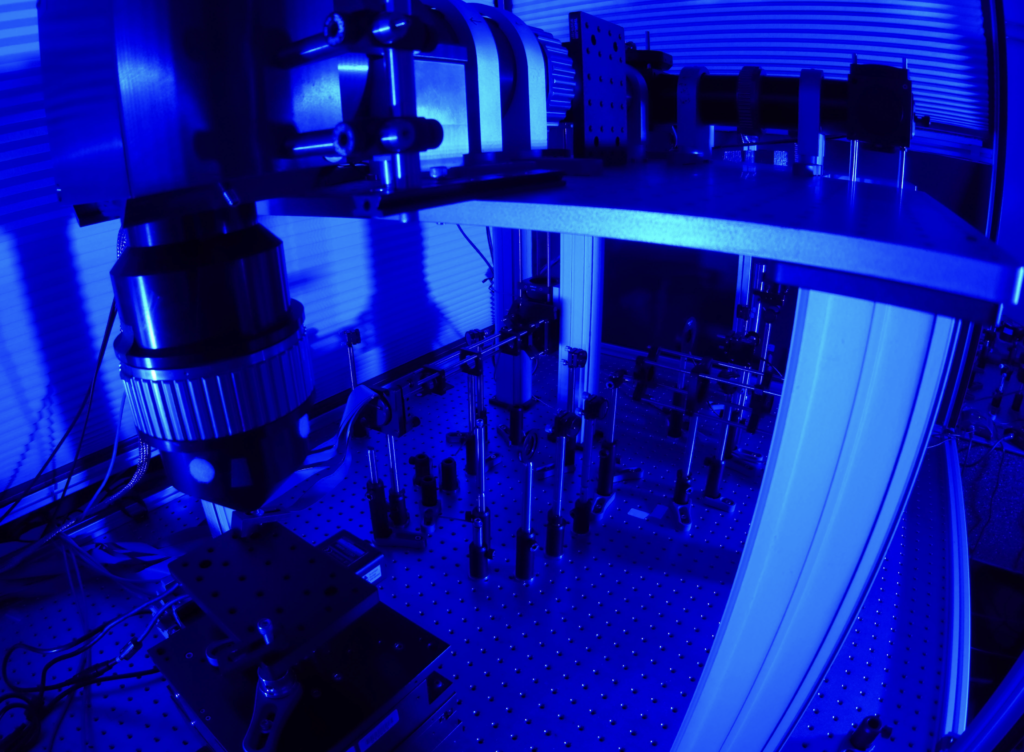

Robot arm with a 2p scope attached

Thorlabs has worked with the great Jacob Reimer to create a streamlined 2p system that is compact enough to mount on a robotic arm.

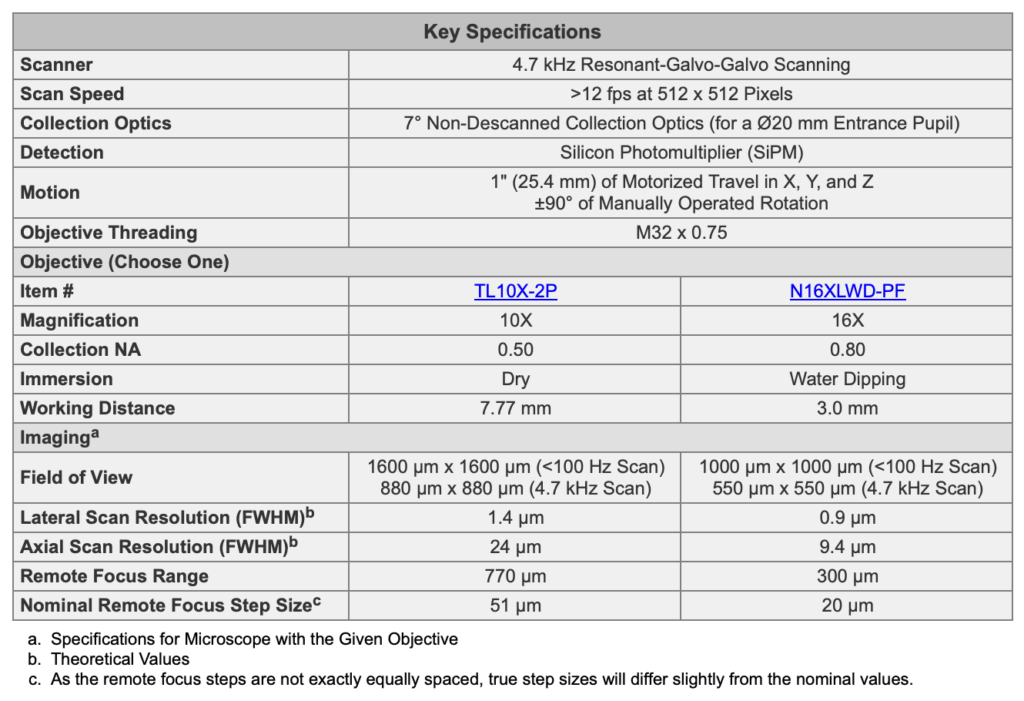

The specs are pretty good. The engineering tradeoffs may be clear to the aficionados. The axial resolution is a bit lower than I expected, but I’m not too worried about it. I bet it works great for neuroscience experiments. I think this is a pretty interesting and enabling product. Let me highlight the positives: the remote focus system looks pretty good and the frame rate is high. (Note that the scan rates listed under “field of view” seem to mix frame rates and line scan rates. The resonant scanner runs at 4.7 kHz; that’s the number to work from.)
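To work from the 4.7 kHz number, here is a minimal sketch of the line-rate-to-frame-rate arithmetic. It assumes bidirectional scanning (two image lines per resonant period) and ignores slow-axis flyback. The line counts are illustrative and not taken from the spec sheet, and the function name is hypothetical.

```python
def frame_rate_hz(resonant_hz, lines_per_frame, bidirectional=True):
    """Approximate frame rate for a resonant-scanned raster.

    Assumption: a bidirectional scan acquires two image lines per
    resonant period; slow-axis flyback time is ignored.
    """
    lines_per_second = resonant_hz * (2 if bidirectional else 1)
    return lines_per_second / lines_per_frame


RESONANT_HZ = 4700.0  # resonant scanner frequency quoted in the post

if __name__ == "__main__":
    # Illustrative line counts only; not values from the spec sheet.
    for lines in (256, 512, 1024):
        fps = frame_rate_hz(RESONANT_HZ, lines)
        print(f"{lines} lines/frame -> {fps:.1f} frames/s")
```

Any frame rate on a spec sheet should be consistent with this kind of estimate for the stated number of lines per frame.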

I know what you’re wondering: “Can I get a large field-of-view 2p system with dual independent scan engines all mounted on a robot arm?” My answer is yes.

FANUC sells a robot arm that is capable of holding the entire Diesel2p system.

Interesting idea! The resolution (theoretical value) is really a bit underwhelming. And I find it difficult to understand why a lateral resolution of 0.9 um comes with an axial resolution of 9.4 um. For a lateral resolution of 0.9 um, I would rather expect an axial resolution of 15 um or beyond (https://www.nature.com/articles/nbt899). Hard to imagine such a deviation for theoretical values …
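For anyone who wants to run this kind of estimate, here is a minimal sketch using the commonly quoted approximations for the two-photon excitation volume (the 1/e widths of the squared illumination PSF, as given in the review linked above, to the best of my recollection). The 920 nm wavelength and the water refractive index of 1.33 are assumptions, not values from the spec sheet, and the function names are hypothetical. The result shifts with those choices and with how one converts 1/e widths to FWHM.

```python
import math

# Conversion from a Gaussian 1/e radius to a full width at half maximum.
FWHM_PER_1E_RADIUS = 2.0 * math.sqrt(math.log(2.0))


def lateral_fwhm_um(wavelength_um, na):
    """Lateral FWHM of the squared illumination PSF (approximation)."""
    if na <= 0.7:
        w_xy = 0.320 * wavelength_um / (math.sqrt(2.0) * na)
    else:
        w_xy = 0.325 * wavelength_um / (math.sqrt(2.0) * na ** 0.91)
    return FWHM_PER_1E_RADIUS * w_xy


def axial_fwhm_um(wavelength_um, na, n_immersion):
    """Axial FWHM of the squared illumination PSF (approximation)."""
    denom = n_immersion - math.sqrt(n_immersion ** 2 - na ** 2)
    w_z = 0.532 * wavelength_um / math.sqrt(2.0) / denom
    return FWHM_PER_1E_RADIUS * w_z


def effective_na_from_lateral(wavelength_um, lateral_um):
    """Invert the low-NA lateral formula to get an effective NA."""
    na = FWHM_PER_1E_RADIUS * 0.320 * wavelength_um / (math.sqrt(2.0) * lateral_um)
    if na > 0.7:
        raise ValueError("low-NA branch does not apply; effective NA > 0.7")
    return na


if __name__ == "__main__":
    WAVELENGTH_UM = 0.92  # assumed excitation wavelength
    N_WATER = 1.33        # assumed immersion medium
    na_eff = effective_na_from_lateral(WAVELENGTH_UM, 0.9)
    z = axial_fwhm_um(WAVELENGTH_UM, na_eff, N_WATER)
    print(f"effective NA ~ {na_eff:.2f}, predicted axial FWHM ~ {z:.1f} um")
```

This only gives the diffraction-limited prediction for a uniformly filled aperture, so it is a sanity check on the ratio of lateral to axial resolution rather than a substitute for bead measurements.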

On the one hand, I agree with you. I thought the same thing when I saw the numbers. On the other hand, of course numbers can deviate from the theoretical predictions, sometimes due to unwanted aberrations, and other times because we do it deliberately (e.g., Bessel beam scanning). Maybe they’re underfilling a bit and/or have some other aberrations due to engineering tradeoffs. They’re doing a lot that’s relatively new, at least in combination: fiber delivery, MEMS mirror scanning, SiPM detection, remote focusing. It’s pretty cool. The engineering team did a nice job.

9.4 microns axial can work fine. I’ve seen systems that were working fine and giving high-quality images where nobody had ever measured beads. When they did, they were surprised at how poor the axial resolution was, but the images still looked okay. We compared 4.4 um and 14 um head to head with temporal multiplexing and didn’t find much of a difference. See Supplementary Figures 9 and 10 here: https://static-content.springer.com/esm/art%3A10.1038%2Fnbt.3594/MediaObjects/41587_2016_BFnbt3594_MOESM9_ESM.pdf (if that link doesn’t work, the DOI is https://doi.org/10.1038/nbt.3594 ). I look forward to seeing the data from the system. I bet it will be very usable, at least with the 16x Nikon objective.

Another issue is that people don’t all measure beads the same way. Sometimes resolution is overestimated or underestimated, even when people are trying to be careful and rigorous. I want them to be apples-to-apples comparisons, and they’re often at least close to that, but there is uncertainty. Sometimes people claim that their system is diffraction limited, but then when they measure beads over their full field-of-view, they get significantly different numbers in different locations.

That’s an interesting comparison in Supplementary Figures 9 and 10 from your Nature Biotechnology paper! It definitely makes me rethink which resolution is useful. I wouldn’t have been able to correctly guess which were the low- and high-resolution images or dF/F traces.

Another thing is that lower resolution results in lower 2P excitation efficiency, so you need higher laser power for the same fluorescence yield (although it also increases the spatial integration volume of the focused laser pulse, which compensates for this loss…).
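A minimal scaling sketch of that trade-off, assuming a paraxial Gaussian focus and a thick sample uniformly filled with fluorophore. All quantities are in arbitrary units with constants dropped, the default wavelength and refractive index are placeholders, and the function name is hypothetical. In this simplified picture the per-molecule excitation rate falls as the fourth power of the beam waist while the excitation volume grows as the fourth power, so the product stays constant. For a structure smaller than the focus, only the first factor applies.

```python
import math


def two_photon_scaling(power, waist_um, wavelength_um=0.92, n=1.33):
    """Relative 2P signal terms for a paraxial Gaussian focus.

    Assumptions: arbitrary units, proportionality constants dropped,
    uniform fluorophore concentration, no scattering or aberrations.
    Returns (per_molecule_rate, excitation_volume, total_signal).
    """
    # Peak intensity scales as power over focal spot area.
    intensity = power / (math.pi * waist_um ** 2)
    # Two-photon excitation rate per molecule scales as intensity squared.
    per_molecule = intensity ** 2
    # Rayleigh range of a Gaussian beam in a medium of index n.
    rayleigh_um = math.pi * n * waist_um ** 2 / wavelength_um
    # Excitation volume scales as spot area times axial extent.
    volume = waist_um ** 2 * rayleigh_um
    return per_molecule, volume, per_molecule * volume


if __name__ == "__main__":
    for waist in (0.5, 1.0):
        rate, vol, total = two_photon_scaling(power=1.0, waist_um=waist)
        print(f"waist {waist} um: per-molecule {rate:.3g}, "
              f"volume {vol:.3g}, total {total:.3g}")
```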

On the other hand, the best resolution also means the highest sensitivity to artifacts due to brain motion. There are plenty of reasons to justify both very high and somewhat lower resolution. For a recent project to image fine processes of astrocytes, I invested a lot of time in improving the resolution of my 2p microscope. Now the resolution is indeed better, but I have to discard more implants due to visible axial motion artifacts.

But coming back to the Thorlabs Prelude scope – I’m also looking forward to seeing the data generated by it. I wonder if they really want to use two such scopes simultaneously imaging both auditory and visual cortex. I was immediately reminded of the Lecoq et al. (2014) scope (https://pyramidal.stanford.edu/publications/Lecoq_2014.pdf), but the dual Prelude scope would be more flexible.

They did similar comparisons in that work. See Supplementary Figures 3 and 4.

Direct link: https://static-content.springer.com/esm/art%3A10.1038%2Fnn.3867/MediaObjects/41593_2014_BFnn3867_MOESM60_ESM.pdf

Or if that doesn’t work, just go to the supplement of https://doi.org/10.1038/nn.3867

Does anyone know which scanners are being used?