Can you beat von Neumann?

Conventional, von Neumann-style computation is focused on arithmetic, logic, and Boolean algebra. Switches (transistors) are the relevant building blocks for those systems even at the conceptual level, and certainly at the hardware and software levels. Humankind has become spectacular at making tiny transistors.

Newer machine learning is based on neural nets, including architectures like transformers. Here the relevance of switches is less clear. But since humankind is great at making computing devices with switches, we typically use them for the hardware implementation anyway. When all you have is a hammer, everything looks like a nail.

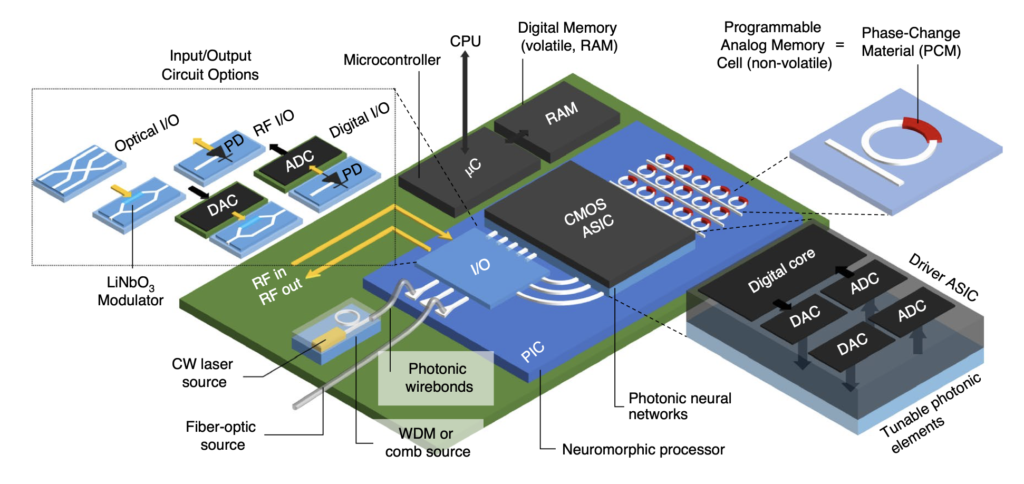

We are currently living through a Cambrian explosion of non-von Neumann-style computing approaches, including Carver Mead-style neuromorphic computing and new nonlinear photonic devices. To have relevance beyond the lab, a technology has to offer orders-of-magnitude improvements over conventional approaches.

For optimization problems, there are already commercially available non-von Neumann-style approaches that win. Their overall impact depends on the market relevance of these optimization problems.

For modern machine learning, it’s not clear that any of the alternative approaches wins. Compute-in-memory seems like an excellent bet, and there are some start-ups that might find success in the future. But right now, the main AI hardware company, NVIDIA (which is also the most valuable company in the world at the moment), is firmly von Neumann.

The challenge for any ML hardware start-up is to outperform NVIDIA hardware for real-world users. Benchmarks should be helpful, but they are so easy to game that benchmark claims sound like marketing BS at this point. These start-ups need testimonials from real-world users at the cutting edge saying, “I’d rather run my code on this new hardware than on NVIDIA hardware.”