Resonant scanning with ScanImage 5

ScanImage 5 supports resonant scanning with a wide range of hardware, so custom rigs can add resonant scanning pretty easily while sticking with ScanImage for acquisition.

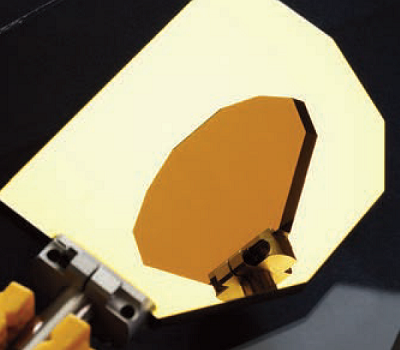

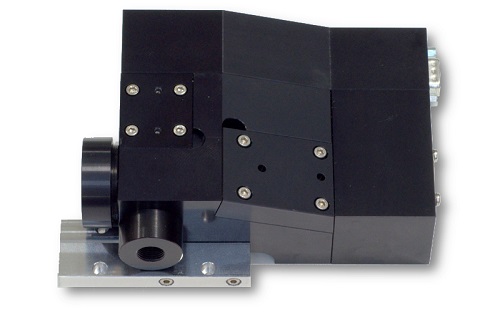

It takes about $10,000 worth of electronics from National Instruments. In addition, you’ll need the resonant and galvo scanners themselves. From Cambridge, resonant scanners are under $2000, and conventional galvos are in the same ballpark. However, resonant scanners are very loud, so they should be sealed up. You can engineer this yourself, or buy a module from Sutter or Thorlabs (you’ll have to email them for a quote for the scan module alone). These sealed optical assemblies include the drive boards from Cambridge and list for about 11 or 12 grand. So, all told, it’s at least a $15,000–$22,000 investment.

Of course, there are optional add-ons like a piezo objective mover, a Pockels cell, or a more powerful FPGA, and those can tack on another 10-20 grand, depending on what all you want. At least you won’t have to write your own software. Vijay and his team will handle that.

Neurolabware seem to use dedicated high-speed DAQs from Alazar Tech for fast acquisition, rather than the NI boards. Can anyone comment on the pros and cons of using either solution?

http://neurolabware.squarespace.com/accessories

http://www.alazartech.com/products/ats9440.htm

Several different approaches can work.

One could sample at a very high rate (GHz) to capture the PMT waveform and then do all of the signal processing in software. For standard 2p imaging it’s an interesting approach and would let the experimenter “re-process” the data as much as they like. This approach requires a powerful computer with a high sustained data acquisition rate.
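To put rough numbers on “a high sustained data acquisition rate”, here is a back-of-the-envelope sketch. The sample rates, bit depths and two-channel setup are illustrative assumptions, not specifications of any particular digitizer.

```python
# Back-of-the-envelope host data rates for three digitization strategies.
# All sample rates, bit depths and channel counts are illustrative assumptions.

def data_rate_bytes_per_s(sample_rate_hz: float, bytes_per_sample: int, channels: int) -> float:
    """Bytes per second streamed to the host when digitizing continuously."""
    return sample_rate_hz * bytes_per_sample * channels


scenarios = {
    # Raw PMT waveform at ~1 GS/s with an 8-bit digitizer (hypothetical).
    "raw waveform, 1 GS/s, 8-bit": data_rate_bytes_per_s(1e9, 1, 2),
    # One 16-bit sample per laser pulse at ~80 MHz.
    "laser-synced, 80 MS/s, 16-bit": data_rate_bytes_per_s(80e6, 2, 2),
    # Filtered PMT signal (e.g. ~1 MHz amplifier) sampled at 4 MS/s, 16-bit.
    "filtered, 4 MS/s, 16-bit": data_rate_bytes_per_s(4e6, 2, 2),
}

for name, rate in scenarios.items():
    print(f"{name:<32} {rate / 1e6:8.0f} MB/s (2 channels)")
```

Under these assumptions, raw GHz digitization moves gigabytes per second, over a hundred times the rate of a filtered, MHz-rate signal.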

Alternatively, outboard electronics can do the signal processing and provide lower-bandwidth signals to the computer, which can then be more modest. Often this is in the form of a PMT amplifier with a 1 MHz cutoff filter.

Neurolabware is taking a third approach. They’re using a high-bandwidth PMT amp and syncing the sampling to the laser pulses, so there’s one sample per laser pulse. Some signal will be lost with this approach if sampling below the laser pulse rate, and when “late” photon events occur following a laser pulse (think of a fluorescence lifetime curve). A sample taken at a short delay will catch most of the events, but late-arriving fluorescence will not be recorded if there’s only one short-delay sample per laser pulse (unless the signal is pre-filtered to reduce its bandwidth somewhat).
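A minimal sketch of the “late photon” issue, assuming an ideal single-exponential decay and an instantaneous, rectangular detection window (no PMT transit-time spread or amplifier filtering). The 2.7 ns lifetime is the GCaMP value quoted later in the thread; the window widths are arbitrary.

```python
import math


def fraction_in_window(tau_ns: float, start_ns: float, width_ns: float) -> float:
    """Fraction of photons from a single-exponential decay (lifetime tau_ns) emitted
    between start_ns and start_ns + width_ns after the laser pulse.
    Ignores PMT transit-time spread and amplifier filtering."""
    return math.exp(-start_ns / tau_ns) - math.exp(-(start_ns + width_ns) / tau_ns)


PULSE_PERIOD_NS = 12.5  # ~80 MHz laser
TAU_NS = 2.7            # example lifetime (GCaMP value quoted later in the thread)

for width in (1.0, 3.0, 6.0, PULSE_PERIOD_NS):
    f = fraction_in_window(TAU_NS, 0.0, width)
    print(f"window 0-{width:4.1f} ns captures {f:.0%} of emitted photons")
```

With these assumptions, a 1 ns window captures only about 31% of the emitted photons, while integrating over the full 12.5 ns period captures about 99%.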

There’s a nice discussion here:

http://scanbox.wordpress.com/2014/03/18/synchronize-to-the-laser/

Vincent Bonin and Tobias Rose join the discussion in the comments. Dario’s post makes it sound like they sample at some rate below 80 MHz, but their filter is around 80 MHz, which suggests that they would miss events after some pulses. Maybe Dario can chime in and clarify.

Thinking of the fluorescence lifetime of a calcium indicator, there is of course a risk in using too short a time window for sampling. Because photons from calcium-free indicator arrive much sooner after a laser pulse than those from calcium-bound indicator (~300 ps and ~3 ns for OGB-1), you will decrease your signal amplitude and distort concentration estimates (if you are going for those).
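A toy calculation of that distortion, assuming ideal single-exponential decays with the approximate OGB-1 lifetimes quoted above and ignoring detector and amplifier response. If only photons emitted within an early window are detected, the bound/free brightness ratio is scaled by the ratio of the two captured fractions.

```python
import math

TAU_FREE_NS = 0.3   # Ca2+-free OGB-1 (approximate, as quoted above)
TAU_BOUND_NS = 3.0  # Ca2+-bound OGB-1 (approximate, as quoted above)


def captured_fraction(tau_ns: float, window_ns: float) -> float:
    """Fraction of a single-exponential decay emitted within window_ns of the pulse."""
    return 1.0 - math.exp(-window_ns / tau_ns)


def contrast_scale(window_ns: float) -> float:
    """Factor by which an early detection window scales the bound/free brightness
    ratio relative to integrating the whole decay (1.0 = no distortion)."""
    return captured_fraction(TAU_BOUND_NS, window_ns) / captured_fraction(TAU_FREE_NS, window_ns)


for window in (0.5, 1.0, 2.0, 5.0, 12.5):
    print(f"window {window:4.1f} ns -> bound/free contrast scaled by {contrast_scale(window):.2f}")
```

With a 1 ns window, the bound form’s relative contribution drops to roughly 30% of its full-integration value, the kind of bias that would propagate into calibrated [Ca2+] estimates.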

…quantitative Ca2+ imaging is sooo pre-GECI. 😉

…but of course you are making a very valid point here. So far I had not even thought about this. The good news is that Janelia was thorough and checked the lifetimes of various GCaMPs in their GCaMP5 paper:

Table 3:

https://www.evernote.com/l/AATBEIq6uodA45OD6YAHFD3T_aVnUhdvyN8

Regardless of pH or Ca2+ saturation, the lifetime (tau) usually remains at ~2.7 ns. Since this did not change from GC2 to GC5 (i.e. the lack of lifetime changes, not the absolute lifetime), let’s hope this also holds for GC6.

This means it should be rather safe to tune the lock-in sampling to the baseline GC6 fluorescence, or simply to tune the system to a fluorophore with a much shorter lifetime.

I admit I am old school: I was thinking of BAPTA-based indicators. GCaMPs do seem to be “lifetime neutral”, so they should be fine. FRET-based GECIs, on the other hand … not so much. Here you will have the wonderful situation of four fluorescence time components (two each for donor and acceptor), and the relative amplitudes of these components will be [Ca2+] dependent:

Both donor and acceptor will have a slow lifetime for the calcium-free form (admittedly very dim for the acceptor). Add calcium to the mix and you get FRET. Now the donor will have a shorter lifetime. The acceptor intensity will follow a more complex time course: while the fluorescence decay itself will be unchanged, the fluorescence rise is not ‘instantaneous’ any more, but depends on the time course of the FRET process (easily hundreds of picoseconds). But I suspect this is a purely academic problem, especially as calcium imaging with GECIs is not going to be overly quantitative as long as the cooperativity problem isn’t solved.
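The acceptor rise described above follows from standard sequential first-order kinetics: the donor excited state decays with its FRET-shortened lifetime, a fraction E of those decays populates the acceptor, and the acceptor decays with its own lifetime. This is a simplified sketch (no direct acceptor excitation, single lifetimes, no instrument response); the parameter values in the usage line are hypothetical.

```python
import math


def acceptor_population(t_ns: float, tau_donor_fret_ns: float,
                        tau_acceptor_ns: float, efficiency: float) -> float:
    """Excited-acceptor population (relative to the initial donor excitation) at t_ns
    after a delta-function excitation of the donor; acceptor emission is proportional to it.

    Model: dD/dt = -k_d D, dA/dt = k_t D - k_a A, D(0) = 1, A(0) = 0,
    with k_d = 1/tau_donor_fret, k_a = 1/tau_acceptor and k_t = efficiency * k_d.
    """
    k_d = 1.0 / tau_donor_fret_ns
    k_a = 1.0 / tau_acceptor_ns
    if math.isclose(k_d, k_a):
        raise ValueError("closed form below assumes distinct donor and acceptor rates")
    k_t = efficiency * k_d
    return k_t / (k_d - k_a) * (math.exp(-k_a * t_ns) - math.exp(-k_d * t_ns))


def acceptor_peak_time_ns(tau_donor_fret_ns: float, tau_acceptor_ns: float) -> float:
    """Time of maximum acceptor emission (derivative of the expression above set to zero)."""
    k_d = 1.0 / tau_donor_fret_ns
    k_a = 1.0 / tau_acceptor_ns
    return math.log(k_d / k_a) / (k_d - k_a)


# Hypothetical values: donor quenched to 0.5 ns by FRET, acceptor lifetime 3 ns, E = 0.6.
peak = acceptor_peak_time_ns(0.5, 3.0)
print(f"acceptor emission peaks about {peak:.2f} ns after the pulse")
```

The acceptor signal starts at zero, peaks after the donor has partly decayed, and its tail decays with the acceptor’s own lifetime, consistent with the description above.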

We sample at the frequency of the laser, which is close to 80 MHz (in fact, it varies slightly with wavelength). The PMT amplifier is set to a cutoff of 80 MHz, so it slightly smooths the signal… but, as we discussed here http://scanbox.wordpress.com/2014/03/18/synchronize-to-the-laser/ we adjust the phase of the sampling to give the maximum signal (or set it for the ‘early’ or ‘late’ photons, if you prefer). As pointed out, others prefer to filter the PMT signal before sampling and work at lower rates, but then you smear the signal across each line and lose some spatial resolution. We decided we didn’t want to pay that price.
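The phase adjustment can be illustrated with a toy simulation: model one laser period of the filtered PMT signal as an exponential decay smoothed by a Gaussian (standing in for the amplifier), then sweep the sampling delay and keep the one with the largest value. The Gaussian width, lifetime and grid step are assumptions, and this is not meant to describe Scanbox’s actual calibration procedure.

```python
import math

PERIOD_NS = 12.5  # one laser period at ~80 MHz
DT_NS = 0.05      # simulation grid step (assumption)


def filtered_pulse_response(tau_ns: float, sigma_ns: float) -> list[float]:
    """One period of a toy filtered PMT signal: a single-exponential fluorescence decay,
    circularly convolved (treating the signal as periodic with the laser period) with a
    Gaussian kernel that stands in for amplifier/detector smoothing."""
    n = round(PERIOD_NS / DT_NS)
    decay = [math.exp(-i * DT_NS / tau_ns) for i in range(n)]
    response = []
    for i in range(n):
        acc = 0.0
        for j in range(n):
            lag = ((i - j) % n) * DT_NS  # delay from emission time j to sample time i
            if lag > PERIOD_NS / 2:
                lag -= PERIOD_NS         # wrap so the kernel is centred on zero lag
            acc += decay[j] * math.exp(-0.5 * (lag / sigma_ns) ** 2)
        response.append(acc)
    return response


def best_sampling_delay_ns(tau_ns: float, sigma_ns: float) -> float:
    """Delay after the laser sync that maximizes the sampled signal."""
    response = filtered_pulse_response(tau_ns, sigma_ns)
    best = max(range(len(response)), key=response.__getitem__)
    return best * DT_NS


print(f"best delay: {best_sampling_delay_ns(2.7, 1.0):.2f} ns")
```

With these toy numbers, the best delay lands a little over a nanosecond after the sync, because the smoothed signal peaks slightly after the pulse. Moving the delay earlier or later weights the ‘early’ or ‘late’ photons instead.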

Bonin covered what I’m about to say in his comments on the ScanBox post linked to above. I’ll just elaborate a bit. Photon counting is the optimal approach, but analog sampling is fine for most microscopes. Still, all of the standard sampling principles apply: appropriate filtering is key, and if one wants to sample below the Nyquist rate, then sampling that is synchronized to the laser pulses can help.

Dario brought up spatial resolution in a way that could potentially confuse some readers. Sampling at below 80 MHz does not, per se, decrease spatial resolution. For pixel dwell times on the order of several microseconds or more, filtering at 1-10 MHz is fine. Many ScanImage rigs operate in that regime and offer diffraction-limited spatial resolution. Or at least, the PMT signal processing and sampling aren’t what limits the resolution.
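The 1-10 MHz claim can be checked with the standard first-order low-pass relations: time constant tau = 1/(2 pi f_c) and 10-90% rise time ln(9) tau ≈ 0.35/f_c. The 4 microsecond pixel dwell time below is a hypothetical example of “several microseconds”, and real amplifiers may have higher-order responses.

```python
import math


def time_constant_s(cutoff_hz: float) -> float:
    """Time constant of a first-order (single-pole) low-pass filter."""
    return 1.0 / (2.0 * math.pi * cutoff_hz)


def rise_time_10_90_s(cutoff_hz: float) -> float:
    """10-90% step-response rise time of a first-order low-pass: ln(9) * tau ≈ 0.35 / f_c."""
    return math.log(9.0) * time_constant_s(cutoff_hz)


PIXEL_DWELL_S = 4e-6  # hypothetical "several microseconds" pixel dwell time

for cutoff in (1e6, 10e6, 80e6):
    rise = rise_time_10_90_s(cutoff)
    print(f"{cutoff / 1e6:5.0f} MHz: rise time {rise * 1e9:6.1f} ns "
          f"= {rise / PIXEL_DWELL_S:.1%} of a {PIXEL_DWELL_S * 1e6:.0f} us pixel")
```

Even at a 1 MHz cutoff, the rise time (~350 ns) is under a tenth of a 4 microsecond pixel, so the filter smears signal only slightly into neighbouring pixels.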

Here’s why: the movement of the excitation volume between laser pulses is << the dimensions of the excitation volume itself*, and the probability of detecting a photon per laser pulse is often << 1. In the microscope system that Dario and Josh are selling, the nonlinearity of resonant scanning is dealt with in software. To vary the dwell time of pixels according to where they are in the scan line (short times in the middle of the scan line, and longer times at the edges), one needs access to high temporal resolution data. This is where sampling at 80 MHz helps: it offers finer granularity for constructing pixels with a range of dwell times. Some other systems (e.g. Thorlabs’) do this with high-bandwidth outboard electronics including custom FPGAs. Either approach can work fine.

* For example, take a 500 um field of view and a 12 kHz resonant scanner scanning bidirectionally, for a line time of about 42 microseconds. The excitation spot then moves 500 microns in 42 microseconds, or about 12 microns per microsecond. So in 12.5 ns (the time between laser pulses at 80 MHz), the excitation spot moves about 0.15 microns. Excitation volumes are often on the order of a micron (FWHM) in two-photon microscopy, at least for many in vivo GECI experiments. With smaller fields of view and/or slower scanning, the movement of the excitation volume between laser pulses becomes even smaller.

A related note: http://labrigger.com/blog/2010/07/30/measuring-the-gain-of-your-imaging-system/
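The footnote’s arithmetic can be written out as a small function, using the footnote’s example values. The peak-speed line is an addition: for a sinusoidal scan whose full excursion spans the field of view (an assumption), peak speed at the scan center is pi/2 times the mean, which still leaves the per-pulse travel well under a micron.

```python
import math


def travel_per_pulse_um(fov_um: float, resonant_hz: float, rep_rate_hz: float,
                        bidirectional: bool = True) -> float:
    """Mean distance the excitation spot moves between consecutive laser pulses."""
    lines_per_s = resonant_hz * (2 if bidirectional else 1)
    line_time_s = 1.0 / lines_per_s             # ~41.7 us for 12 kHz bidirectional
    mean_speed_um_per_s = fov_um / line_time_s  # ~12 um per microsecond
    return mean_speed_um_per_s / rep_rate_hz


mean_step = travel_per_pulse_um(500.0, 12e3, 80e6)
# Assumes the FOV spans the full sinusoidal excursion, so peak/mean speed = pi/2.
peak_step = mean_step * math.pi / 2
print(f"mean: {mean_step:.3f} um per pulse, peak (scan center): {peak_step:.3f} um per pulse")
```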

>> To vary the dwell time of pixels according to where they are in the scan line (short times in the middle of the scan line, and longer times at the edges), one needs access to high temporal resolution data.

Is this really true? I would rather think that the dwell time is only critical for exposure/scanning, not for acquisition. If I get more signal closer to the turnaround points, why should I throw away these photons? Rather, I would lower the laser intensity at these points using a Pockels cell, but still sample at a constant frequency and perhaps undistort the image once it is acquired.

Ok, to be precise, I didn’t mean “throw away photons”, but “throw away signal” …
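The Pockels-cell alternative can be sketched under one assumption about the physics: two-photon signal per pixel scales as power squared times the time spent in the pixel, and that time is inversely proportional to scan speed. For a sinusoidal resonant scan, speed at normalized position x is proportional to sqrt(1 - x^2), so holding excitation constant needs power proportional to (1 - x^2)^(1/4). This ignores photobleaching, the nonlinear voltage-to-transmission curve of the Pockels cell and cropping of the turnarounds, and it is not a description of what any of the systems discussed here actually do.

```python
import math


def relative_power(x: float) -> float:
    """Laser power, relative to the scan center, that keeps two-photon excitation per pixel
    constant along a sinusoidal resonant line.

    x is the normalized position in [-1, 1] (the turnarounds are at +/-1).
    Signal per pixel ~ P**2 * dwell, dwell ~ 1/|v|, |v| ~ sqrt(1 - x**2)
    => P ~ |v|**0.5 = (1 - x**2)**0.25.
    """
    if not -1.0 <= x <= 1.0:
        raise ValueError("x must lie in [-1, 1]")
    return (1.0 - x * x) ** 0.25


for x in (0.0, 0.5, 0.8, 0.95):
    print(f"x = {x:4.2f}: relative power {relative_power(x):.2f}")
```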

Hi Peter (replying here because the maximum reply depth has been hit):

The term “dwell time” suggests that the laser is parked in a particular place for a period of time, during which signal is integrated for a single pixel. In resonant scanning the user doesn’t get to set the scan rate, and the laser is always moving. So the “dwell time” is always zero and we should use a different term, like “pixel integration time”.

Your intuition is right, and lots of people (Josh and Dario included) use a Pockels cell to decrease laser power dynamically during scanning.

They’re not throwing away signal, they’re simply dynamically adjusting the pixel integration time to construct an undistorted image, just as you suggest.

However, to make an undistorted image, it’s nice to be able to adjust the pixel integration time smoothly. For example, if they always integrated each pixel for 20 microseconds, then to undistort their images, they only have integer multiples of 20 microseconds to work with because that’s how fast the data was sampled. With their higher time resolution, they can undistort their images with finer granularity.
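The granularity argument can be made concrete with a small simulation. It assumes a bidirectional line spanning the full sinusoidal excursion of a 12 kHz mirror, 512 equal-width pixels and uniformly timed samples, and counts how many samples land in each pixel. At one sample per laser pulse (80 MHz), center pixels get a few samples and edge pixels many more; at a hypothetical 5 MS/s, many pixels receive no sample at all. Real systems crop the turnarounds and may bin differently.

```python
import math


def samples_per_pixel(n_pixels: int, resonant_hz: float, sample_rate_hz: float) -> list[int]:
    """Count the uniformly timed samples falling in each equal-width pixel during one
    line (half a mirror period) of a bidirectional sinusoidal resonant scan.
    Assumes the line spans the mirror's full excursion, turnarounds included."""
    half_period_s = 0.5 / resonant_hz
    n_samples = int(half_period_s * sample_rate_hz)
    counts = [0] * n_pixels
    for k in range(n_samples):
        t = k / sample_rate_hz
        x = -math.cos(2.0 * math.pi * resonant_hz * t)  # position in [-1, 1]
        pixel = min(int((x + 1.0) / 2.0 * n_pixels), n_pixels - 1)
        counts[pixel] += 1
    return counts


fine = samples_per_pixel(512, 12e3, 80e6)   # one sample per laser pulse
coarse = samples_per_pixel(512, 12e3, 5e6)  # hypothetical 5 MS/s acquisition
print("80 MS/s: center pixel", fine[256], "samples, edge pixel", fine[0], "samples")
print("5 MS/s: pixels with no sample:", coarse.count(0), "of", len(coarse))
```

In this idealized geometry, each center pixel gets only about four 12.5 ns samples and the edge pixels close to a hundred, which is the spread of integration times the software correction has to handle.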

I haven’t yet seen the ScanImage 5 code, so I can only speculate. One of the main advantages of ScanImage is that it is a working system that has been tested for a long time. (This may also be a disadvantage: at least for ScanImage 4, the source code is so deeply interwoven that it is difficult for a beginner to modify. This might be different for ScanImage 5.) It integrates a very nice driver library for motor controllers, Pockels cells, fast piezos, etc. that can be modified and adapted by the user rather easily, e.g. to control an additional NI card. This modularization is really useful. For example, you can use ScanImage’s NI DAQmx adapters (which are free) to replace the Matlab Data Acquisition Toolbox (which is not free) in applications not related to ScanImage.

A possible advantage for some users is that ScanImage was designed to work hand in hand with the software Ephus (also from the Svoboda lab). However, as far as I know, ScanImage 5 runs only on 64-bit Matlab, and Ephus so far only on 32-bit. If anybody has more recent news, please let me know.

Therefore, using both programs would require two Matlab installations, and the only crosstalk would be a start-acquisition trigger.

Upgrading from a pure galvo-scanning setup may bring costs beyond those mentioned above, for example fast PMT preamplifiers for each channel and a high-performance computer with a large RAID 0 array or SSDs.
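To gauge the disk requirement, here is a rough estimate of the stored-image data rate. The frame size, bit depth, channel count and the 12 kHz bidirectional scanner (borrowed from the example earlier in the thread) are assumptions; saving raw per-pulse samples would need far more bandwidth than this.

```python
def frame_rate_hz(lines_per_frame: int, resonant_hz: float, bidirectional: bool = True) -> float:
    """Frames per second when every line is one sweep of the resonant mirror."""
    lines_per_s = resonant_hz * (2 if bidirectional else 1)
    return lines_per_s / lines_per_frame


def storage_rate_bytes_per_s(pixels_per_line: int, lines_per_frame: int, channels: int,
                             bytes_per_pixel: int, resonant_hz: float) -> float:
    """Sustained write rate for saving every frame of every channel."""
    frame_bytes = pixels_per_line * lines_per_frame * channels * bytes_per_pixel
    return frame_bytes * frame_rate_hz(lines_per_frame, resonant_hz)


rate = storage_rate_bytes_per_s(512, 512, 2, 2, 12e3)  # 512 x 512, 2 channels, 16-bit
print(f"{frame_rate_hz(512, 12e3):.1f} frames/s, {rate / 1e6:.1f} MB/s, "
      f"{rate * 3600 / 1e9:.0f} GB per hour")
```

Under these assumptions that is on the order of 50 MB/s, or well over 100 GB per hour of continuous two-channel imaging.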

Personally, I very much like the approach of Scanbox, first because it uses an inexpensive chip (PSoC 5) to synchronize all the events, and also because the related blog is a source of interesting information, and you can easily check which components are used. A small drawback is that the source code is not freely available on the internet, only to those who use the software. (This might be because controlling the data acquisition board (Alazar 9440) requires a software development kit from AlazarTech, which is not expensive at all, but not free. But this is only my speculation.)